4 Support Vector Regression

4.1 Introduction

In this section, the methodology Support Vector Regression (SVR) is applied for travel-time prediction. SVR is a computational technique that has its root on machine learning (ML) methodologies.

The feasibility of applying SVM in travel-time prediction is demonstrated in this section, the results and analysis are further presented.

4.2 Method Description

SVR is an extension of ML technique known as support vector machine (SVM) to regression problems. SVM makes use of a hypothesis space of linear functions in a feature space, trained with a learning algorithm from optimisation theory. An important aspect of SVM is that not all the available training examples are used in the training algorithm. The subset of points that lies on the margin, called support vectors are the only ones that defines the hyperplane of maximin margin.

SVMs techniques are grounded in the basic principle that for a given learning task, with a given finite number of training data, the best generalization performance will be achieved if the right balance is struck between the accuracy attained and the capacity of the machine to learn any training set without error (Burges 1998).11 Figure 4.1 presents the classic example of linear support vector machine.

![Linear support vector machine *[source: @R-burges]*](Images/SVR.png)

Figure 4.1: Linear support vector machine (source: Burges 1998)

SVM algorithms use a set of kernel functions. The function of kernel is to take data as input and transform it into the required form. Kernel methods in ML are used in a different way comparing to spatial analysis (Kernel Density Estimation, KDE). Instead of being applied to spatial distances, they are applied to distances between sets of variables. Different SVM algorithms use different types of kernel functions, for example linear, nonlinear, polynomial, radial basis function (RBF), and sigmoid. Figure 4.2 presents the basic scheme of kernel function method applied to ML.

![Kernel to machine learning *[source: @R-cheng2]*](Images/kernel.png)

Figure 4.2: Kernel to machine learning (source: Cheng and Haworth 2019)

A kernel function is usually used to refer to the kernel trick, a method of using a linear classifier to solve a non-linear problem. It entails transforming linearly inseparable data to linearly separable ones. Machine learning methods are widely applied to classification and regression problems. The best-known kernel method for regression is support vector regression (SVR), which is based on the principles of statistical learning theory (Cortes and Vapnik 1995). Kernel methods convert linear algorithms for use on nonlinear data by projecting the input data into a high dimensional feature space in which a linear solution is found.

4.2.1 SVR to Predict Travel Time for Urban Road

The idea of the regression problem is to determine a function that can approximate (or predict) future values accurately. The generic SVR estimating function takes the form:

\[\begin{equation} \operatorname {f(x)} = (w.\Phi(x)) = b \tag{4.1} \end{equation}\]Where \(w\subset R^{n}\), \(b\subset R\) and \(\Phi\) denotes a nonlinear transformation from \(R^{n}\), to high-dimensional space. The kernel function applied to the study is the Gaussian radial basis function (RBF):

\[\begin{equation} \displaystyle K(\mathbf {x_i} ,\mathbf {y_i} )=\exp \left(-{\frac {\|\mathbf {x_i} -\mathbf {y_i} \|^{2}}{2\sigma ^{2}}}\right) \tag{4.2} \end{equation}\]Where \(\sigma > 0\) is the kernel bandwidth.

SVR tries to find a function \(f(x)\) where the predicted values are at most \(\in\) from the observed values \(y_i\), fitting inside a tube of width \(2\in\).

![SVR predictive function*[source: @R-wu]*](Images/svr_tube.png)

Figure 4.3: SVR predictive function(source: Wu and Lee 2004)

where \(x_i\) is a training sample with target value \(y_i\). The inner product plus intercept \(\langle w, x_i \rangle + b\) is the prediction for that sample, and \(\in\) is a free parameter that serves as a threshold. All predictions have to be within a \(\in\) range of the true predictions. Slack variables are usually added into the above to allow for errors and approximation in the case the problem is infeasible.

To train the SVR models, a better classification could be achieved by optimizing the parameters in some way. There are two parameters to train: the kernel parameter sigma and the constant c. The former is set to automatic by default. The constant C determines the amount of allowable error in the model, controlling the trade-off between model complexity and error. The value of c is not estimated and is set to 1. A large c assigns higher penalties to errors so that the regression is trained to minimize error with lower generalization, while a small c assigns fewer penalties to errors.

Allowing a large number of errors, the model would be less complex, thus providing higher generalization ability. On the other hand, If c is set with a high number, SVR would not allow the occurrence of any error and results would lead to a complex model. Therefore, it is worthwhile testing some values of c to see how they affect the results. Finally, epsilon determines the width of the tube.

4.3 The Modeling and Solving Approach

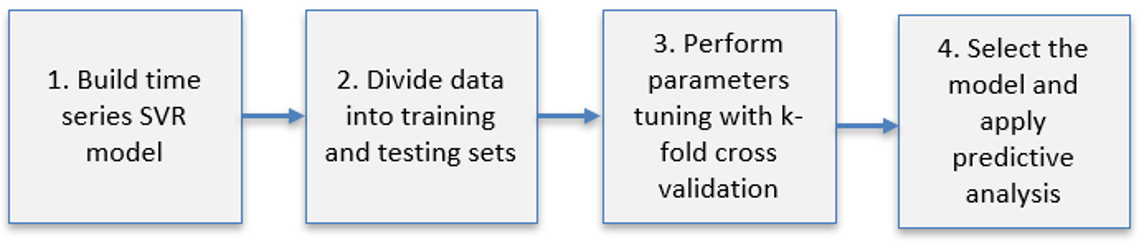

The first part of the experiment is to train the dataset. The data have been divided into training (80%) and testing (20%) data. Four road links have been aleatory chosen to train the model: IDs 2112, 2061, 473 and 2087. The strategy applied to the model training procedure is composed of four steps presented on Figure 4.4.

Figure 4.4: Model training procedure steps.

To train the data, the package called caret (short for classification and regression training) has been used. The library provides a set of functions that attempt to streamline the process for creating predictive models.12

Step 1: Build a time series SVR Model

The first step is to rearrange the series in order to build a time series SVR model by means of a temporal autoregressive structure. The moving average uses past forecast errors to forecast future values of the series.

load("forecasting.RData") #first load the data to the space environement

library(kernlab) # install if necessary using 'install.packages'

library(caret) # install if necessary using 'install.packages'To build the model, we will use a temporal autoregressive structure. We use embed() function to rearrange the series. This means we will forecast the future TT at a single location (link=2112) as a function of a subset of previous TT observations.

The resulting matrix can be used for one step ahead forecasting by using the first three columns as the independent variables and the fourth column as the dependent variable. In order to perform parameters tuning with k-fold cross-validation, three different embedding dimensions q have been applied: q=3: q=4 and q=5.

We will use the embed() to rearrange the series in this way:

# embed: function to rearrange the series

m <- 3

data.2112 <- embed(x = UJT [,"2112"], dimension = m+1)

colnames(data.2112) <- c("y_t-3","y_t-2","y_t-1","y_t")The function embeds the time series x into a low-dimensional euclidean space. This way, it is possible to forecast the future temperature as a function of a subset of previous temperature observations. Each row of the resulting matrix consists of sequences \(x[t], x[t-1], ..., x[t-dimension+1]\), where \(t\) is the original index of \(x\). If \(x\) is a matrix, (that is \(x\) contains more than one variable) then \(x[t]\) consists of the observation on each variable.

The resulting matrix can be inspected with the funcion head () which returns the first or last parts of the matrix. You will see that each row contains four observations, which are four consecutive TT observations. The first row of the matrix contains the first four observations from UJT[,"2112"] in reverse order ( for example column 4 is the first observation and column 1 is the 4th observation). In the second row, the first observation is removed from column 4 and the 5th observation is added to column 1. This matrix can be used for one step ahead forecasting by using the first three columns as the independent variables and the fourth column as the dependent variable.

# Returns the first or last parts of a vector, matrix, table, data frame or function

head (data.2112) ## y_t-3 y_t-2 y_t-1 y_t

## [1,] 0.1212617 0.1152238 0.1101922 0.09610367

## [2,] 0.1207585 0.1212617 0.1152238 0.11019217

## [3,] 0.1061669 0.1207585 0.1212617 0.11522378

## [4,] 0.1111985 0.1061669 0.1207585 0.12126170

## [5,] 0.1368597 0.1111985 0.1061669 0.12075854

## [6,] 0.1157269 0.1368597 0.1111985 0.10616689The same procedure could be applied to the other dimensions q=4 and q=5.

m <- 4

data.2112_d2 <- embed(x = UJT [,"2112"], dimension = m+1)

colnames(data.2112_d2) <- c("y_t-4" , "y_t-3","y_t-2","y_t-1","y_t")

m <- 5

data.2112_d3 <- embed(x = UJT [,"2112"], dimension = m+1)

colnames(data.2112_d3) <- c("y_t-5" , "y_t-4" , "y_t-3","y_t-2","y_t-1","y_t")Step 2: Divide data into training and testing sets

The next step is to divide the embedded data into training and testing sets. The subset of training data is used to fit the model and the testing set to estimate model fitness. It should be noted that in temporal data there is a continuity component whereas it is important to take into account the ordering of time.

That said, based on a continuous data series, the first 80% data of the months have to be selected to train the model and the remaining 20% data has been selected to test the model. Hence, the particular training set \(S= {(X_i,y_i), …,(X_i,y_i)}\) has been drawn from the distribution of the random vectors \(y\) and \(X\).

# divide data into training and testing sets - Dimension 1

split <- 4143 # UJT row to separe training and testing data

yTrain <- data.2112 [1:split,1]

XTrain <- data.2112 [1:split,-1]

yTest <- data.2112 [(split+1):nrow(data.2112 ),1]

XTest <- data.2112 [(split+1):nrow(data.2112 ),-1]

# divide data into training and testing sets - Dimension 2

split_d2 <- 4143 #UJT row to separe training and testing data

yTrain_d2 <- data.2112_d2 [1:split,1]

XTrain_d2 <- data.2112_d2 [1:split,-1]

yTest_d2 <- data.2112_d2 [(split+1):nrow(data.2112_d2 ),1]

XTest_d2 <- data.2112_d2 [(split+1):nrow(data.2112_d2 ),-1]

# divide data into training and testing sets - Dimension 3

split_d3 <- 4143 # UJT row to separe training and testing data

yTrain_d3 <- data.2112_d3 [1:split,1]

XTrain_d3 <- data.2112_d3 [1:split,-1]

yTest_d3 <- data.2112_d3 [(split+1):nrow(data.2112_d3 ),1]

XTest_d3 <- data.2112_d3 [(split+1):nrow(data.2112_d3 ),-1]Step 3. Perform parameters tuning with k-fold cross validation

To determine the values of the tuning parameters, one approach is to use a resampling to estimate how well the model performs on the training set. There are different types of resampling methods being k-fold cross-validation one of the most common types13. The process must be repeated many times and the performance estimates from each holdout set are averaged into a final overall estimate of model efficacy such that given the training set, the algorithm produces a prediction function \(f(x)= Փ(x_i)\). For each parameter combination the model fitness is estimated via resampling and the relationship between the tuning parameters and the model performance is evaluated.

The k-fold cross-validation has been applied as resampling method with \(k\) set to 5. In k-fold cross validation, the data are randomly partitioned into k-folds. Each fold is left out in turn and the remaining \(k-1\) folds are used to train a model and predict its values. The selected model is the one with the best average performance across the k folds14. The procedure prevents overfitting to a subset of the training data.

The caret package is used to perform the cross validation with a radial basis function kernel applied for parameter tuning. There are two tuning parameters: the radial basis function scale parameter bandwidth \(α\), and the cost value associated with support vectors. To train the model we will use k-fold cross validation, with \(k\) set to 5.

#To train the model we will use k-fold cross validation, with k set to 5

ctrl <- trainControl(method = "cv", number=5) To test (at present, caret only allows \(sigma\) and \(C\) to be trained in this way so we cannot optimise over epsilon automatically) and train the model, a grid of parameters must be created.

# Create a grid of parameters to test and train the model with dimension 1

SVRGridCoarse <- expand.grid(.sigma=c(0.001, 0.01, 0.1), .C=c(10,100,1000))

SVRFitCoarse <- train(XTrain, yTrain, method="svmRadial", tuneGrid=SVRGridCoarse, trControl=ctrl, type="eps-svr")

# Display results

SVRFitCoarse ## Support Vector Machines with Radial Basis Function Kernel

##

## 4143 samples

## 3 predictor

##

## No pre-processing

## Resampling: Cross-Validated (5 fold)

## Summary of sample sizes: 3313, 3315, 3315, 3315, 3314

## Resampling results across tuning parameters:

##

## sigma C RMSE Rsquared MAE

## 0.001 10 0.01855788 0.7449160 0.01395675

## 0.001 100 0.01838884 0.7497854 0.01383987

## 0.001 1000 0.01831466 0.7516992 0.01375272

## 0.010 10 0.01834316 0.7509672 0.01377398

## 0.010 100 0.01841338 0.7491764 0.01381130

## 0.010 1000 0.01844320 0.7484456 0.01383601

## 0.100 10 0.01854439 0.7456133 0.01388359

## 0.100 100 0.01887267 0.7366928 0.01400829

## 0.100 1000 0.01959266 0.7178368 0.01415667

##

## RMSE was used to select the optimal model using the smallest value.

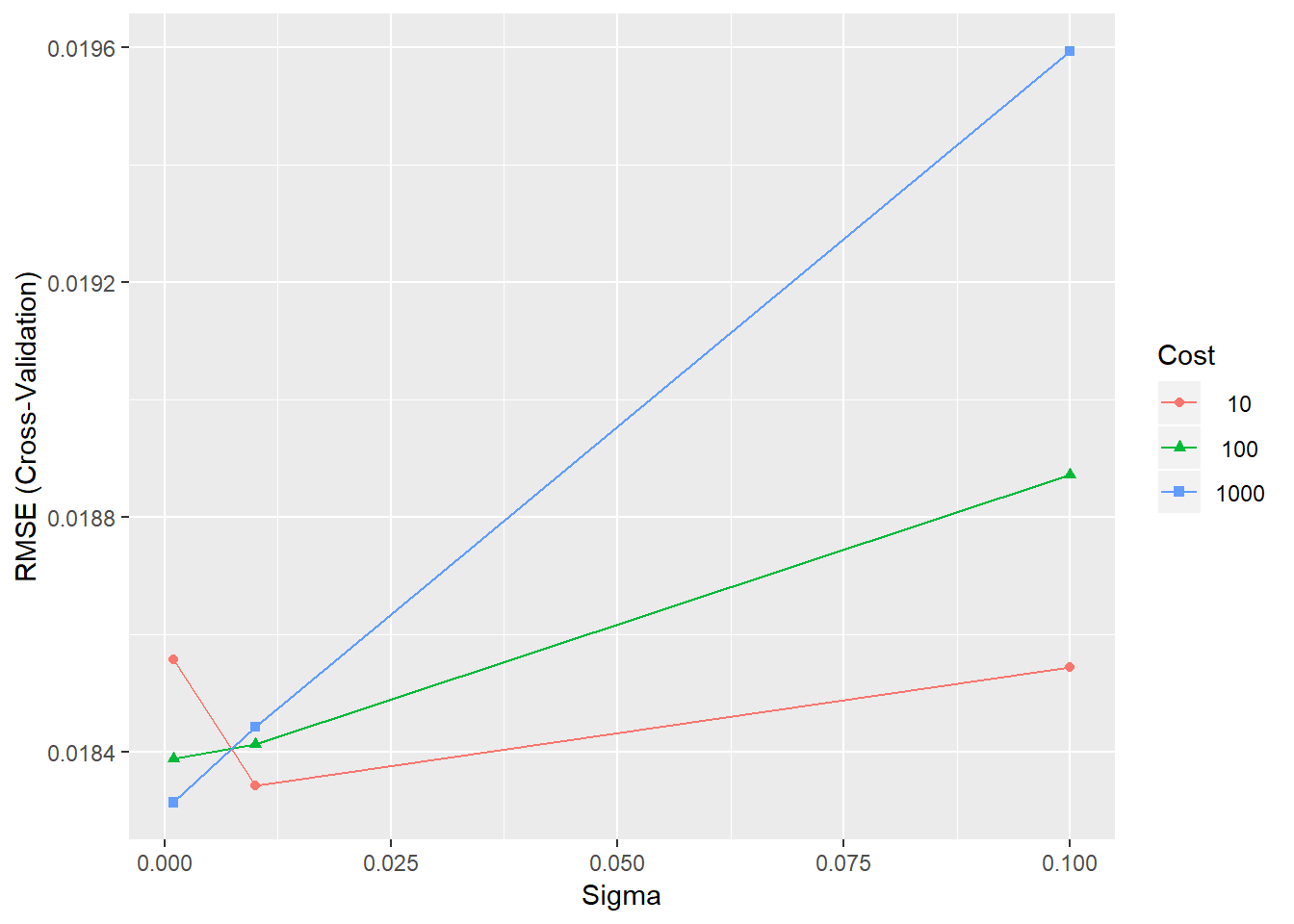

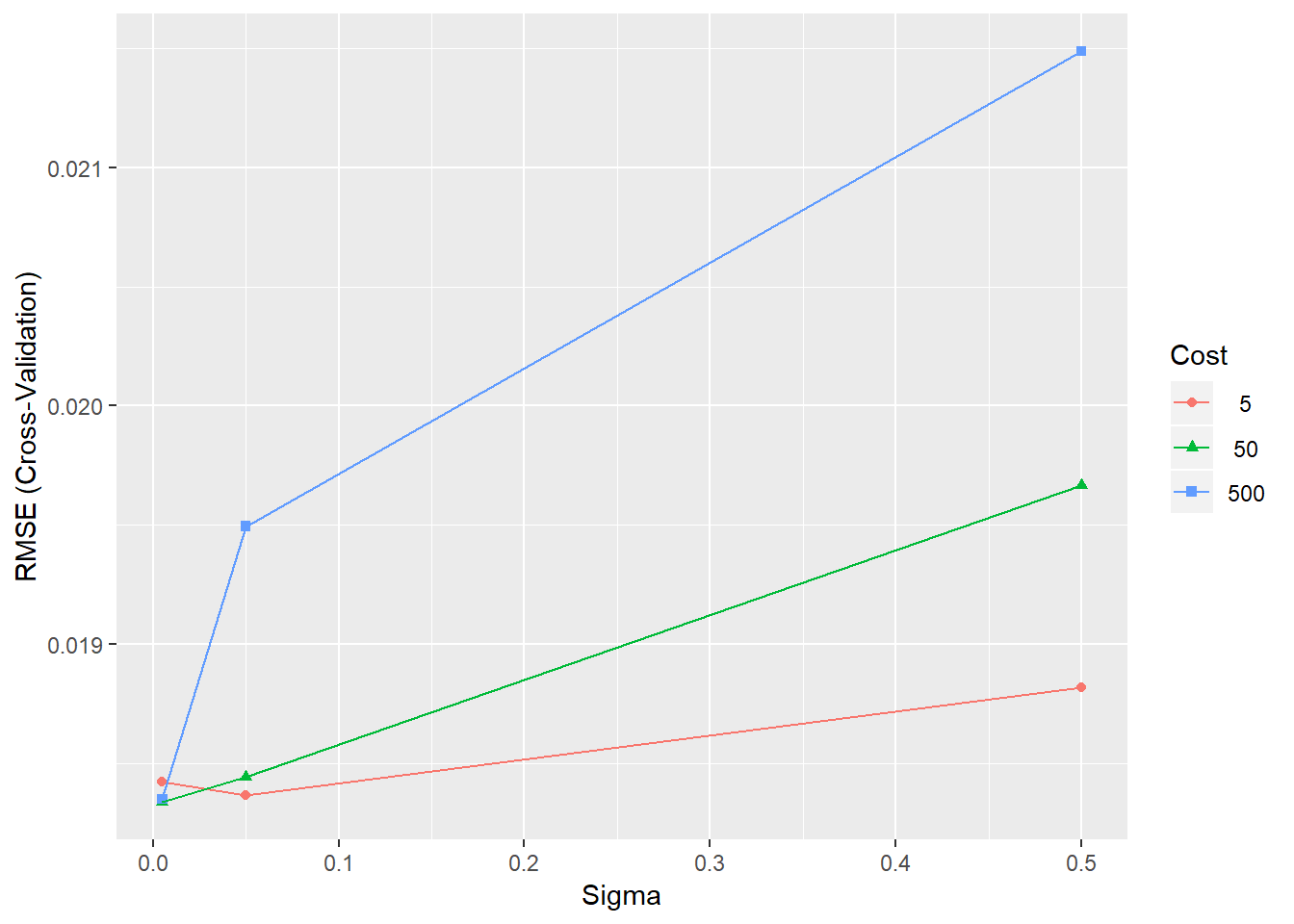

## The final values used for the model were sigma = 0.001 and C = 1000.Results are given for each of the parameter combinations in terms of the root mean squared error (RMSE), R squared and Mean Absolute Error (MAE). We can view the results visually using the ggplot() function, as displayed in figure 4.5.

ggplot(SVRFitCoarse)

Figure 4.5: Training errors for the SVR model with different parameter combinations

The plot shows that the value of sigma=0.1 and C=1000 provide the best model performance. However, we can refine the grid to see if we can gain further improvements in performance.

To test the best performance, we might differentiate the values of the embedding dimension with the three different autoregressive orders have been used: \(q=3\); \(q=4\) and \(q=5\).

# Create a grid of parameters to test and train the model with dimension 2

SVRGridCoarse_d2 <- expand.grid(.sigma=c(0.001, 0.01, 0.1), .C=c(10,100,1000))

SVRFitCoarse_d2 <- train(XTrain_d2, yTrain_d2, method="svmRadial", tuneGrid=SVRGridCoarse, trControl=ctrl, type="eps-svr")

SVRFitCoarse_d2## Support Vector Machines with Radial Basis Function Kernel

##

## 4143 samples

## 4 predictor

##

## No pre-processing

## Resampling: Cross-Validated (5 fold)

## Summary of sample sizes: 3313, 3314, 3314, 3316, 3315

## Resampling results across tuning parameters:

##

## sigma C RMSE Rsquared MAE

## 0.001 10 0.01844992 0.7485321 0.01383254

## 0.001 100 0.01822262 0.7548238 0.01367620

## 0.001 1000 0.01816424 0.7561625 0.01361397

## 0.010 10 0.01819337 0.7551913 0.01363658

## 0.010 100 0.01821017 0.7550758 0.01364336

## 0.010 1000 0.01819567 0.7555293 0.01365335

## 0.100 10 0.01838699 0.7497893 0.01371988

## 0.100 100 0.01867380 0.7415464 0.01388985

## 0.100 1000 0.02000167 0.7053679 0.01426356

##

## RMSE was used to select the optimal model using the smallest value.

## The final values used for the model were sigma = 0.001 and C = 1000.# Create a grid of parameters to test and train the modelwith dimension 3

SVRGridCoarse_d3 <- expand.grid(.sigma=c(0.001, 0.01, 0.1), .C=c(10,100,1000))

SVRFitCoarse_d3 <- train(XTrain_d3, yTrain_d3, method="svmRadial", tuneGrid=SVRGridCoarse, trControl=ctrl, type="eps-svr")

SVRFitCoarse_d3## Support Vector Machines with Radial Basis Function Kernel

##

## 4143 samples

## 5 predictor

##

## No pre-processing

## Resampling: Cross-Validated (5 fold)

## Summary of sample sizes: 3312, 3315, 3315, 3314, 3316

## Resampling results across tuning parameters:

##

## sigma C RMSE Rsquared MAE

## 0.001 10 0.01837042 0.7505541 0.01377700

## 0.001 100 0.01816826 0.7563109 0.01362338

## 0.001 1000 0.01817603 0.7559690 0.01360780

## 0.010 10 0.01822833 0.7545035 0.01363603

## 0.010 100 0.01826660 0.7537413 0.01367489

## 0.010 1000 0.01833533 0.7522191 0.01373710

## 0.100 10 0.01856849 0.7463215 0.01388551

## 0.100 100 0.01933124 0.7263834 0.01423794

## 0.100 1000 0.02172044 0.6724945 0.01492969

##

## RMSE was used to select the optimal model using the smallest value.

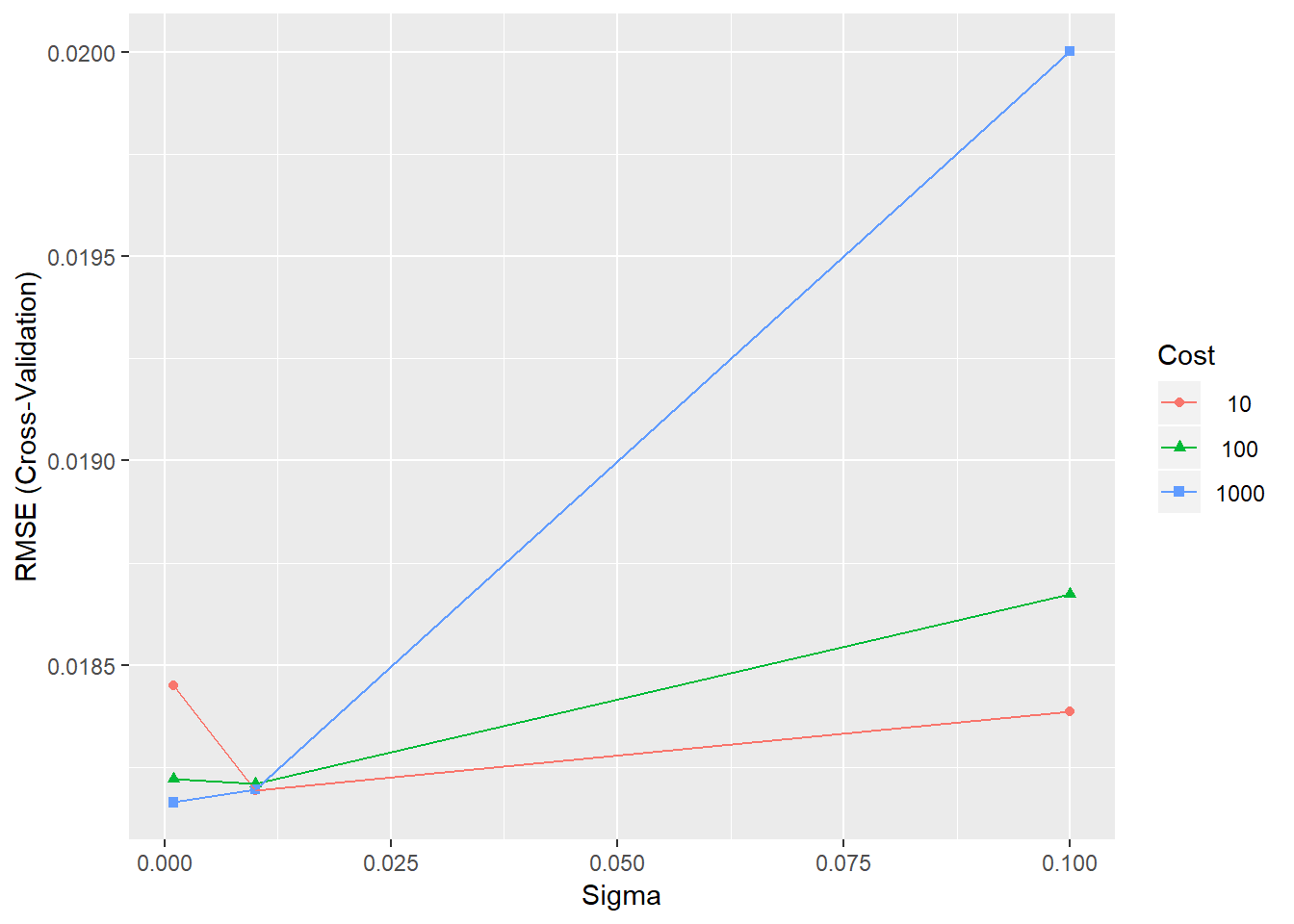

## The final values used for the model were sigma = 0.001 and C = 100.We now can use ggplot() to plot the results.

ggplot(SVRFitCoarse_d2)

ggplot(SVRFitCoarse_d3)

Figure 4.6: Training errors for the SVR model with different parameter combinations

For all dimensions, \(sigma = 0.001\) and \(C = 1000\) present the best outome, whereas \(q = 4\) provides the best fit with \(RMSE = 0.01813995\). However, it should be noted that smaller value of sigma could produce a better result.

We may achieve a better classification if we try to optimise the parameters in some way. There are two parameters to train: the kernel parameter \(sigma\) and the constant \(C\). \(Sigma\) is set automatic by default. The value of \(C\) is not estimated and is arbitrary set to \(a\). Therefore, it is important to test some more values for \(C\).

We can refine the grid to see if we can gain further improvements in performance.

# Testing the grid to see if we can gain further improvements in performance: diferent sigma for d1

SVRGridFine <- expand.grid(.sigma=c(0.005, 0.05, 0.5), .C=c(5,50,500))

system.time(SVRFitFine <- train(XTrain, yTrain, method="svmRadial", tuneGrid=SVRGridFine,

trControl=ctrl, type="eps-svr"))## user system elapsed

## 155.50 4.16 160.37# Display results

SVRFitFine## Support Vector Machines with Radial Basis Function Kernel

##

## 4143 samples

## 3 predictor

##

## No pre-processing

## Resampling: Cross-Validated (5 fold)

## Summary of sample sizes: 3314, 3314, 3314, 3314, 3316

## Resampling results across tuning parameters:

##

## sigma C RMSE Rsquared MAE

## 0.005 5 0.01842091 0.7492711 0.01386240

## 0.005 50 0.01833551 0.7513841 0.01377070

## 0.005 500 0.01834914 0.7509496 0.01376983

## 0.050 5 0.01836352 0.7506480 0.01379302

## 0.050 50 0.01844122 0.7486064 0.01381828

## 0.050 500 0.01949416 0.7230844 0.01400462

## 0.500 5 0.01881759 0.7380230 0.01399943

## 0.500 50 0.01966634 0.7145673 0.01431567

## 0.500 500 0.02148766 0.6668612 0.01488730

##

## RMSE was used to select the optimal model using the smallest value.

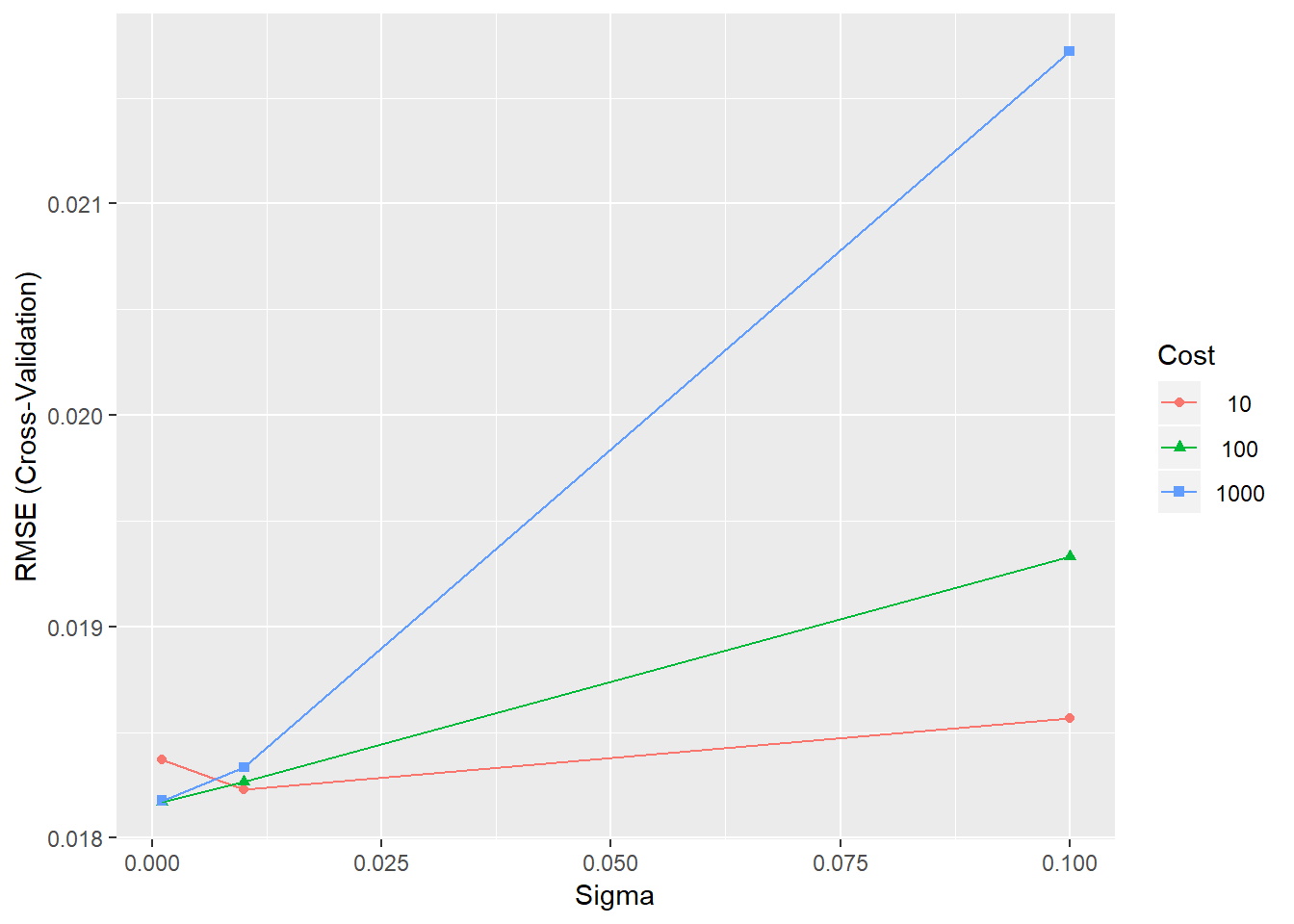

## The final values used for the model were sigma = 0.005 and C = 50.# Plot the results

ggplot(SVRFitFine)

Figure 4.7: Training errors for the SVR model with different parameter combinations

Step 4: Select the model and apply predictive analysis

The effectiveness of a model is commonly measured using a single statistic. For models predicting a numeric outcome, the fitness statistic might be the root mean squared error (RMSE) or the coefficient of determination (r2). For classification, where a discrete outcome is being predicted, the error rate might be an appropriate measure.

Examining the results considering the RMSE index, the best performance can be pointed out for the road link.

The model object for the best model can be accessed by:

SVRFitFine$finalModel## Support Vector Machine object of class "ksvm"

##

## SV type: eps-svr (regression)

## parameter : epsilon = 0.1 cost C = 50

##

## Gaussian Radial Basis kernel function.

## Hyperparameter : sigma = 0.005

##

## Number of Support Vectors : 3359

##

## Objective Function Value : -58828.6

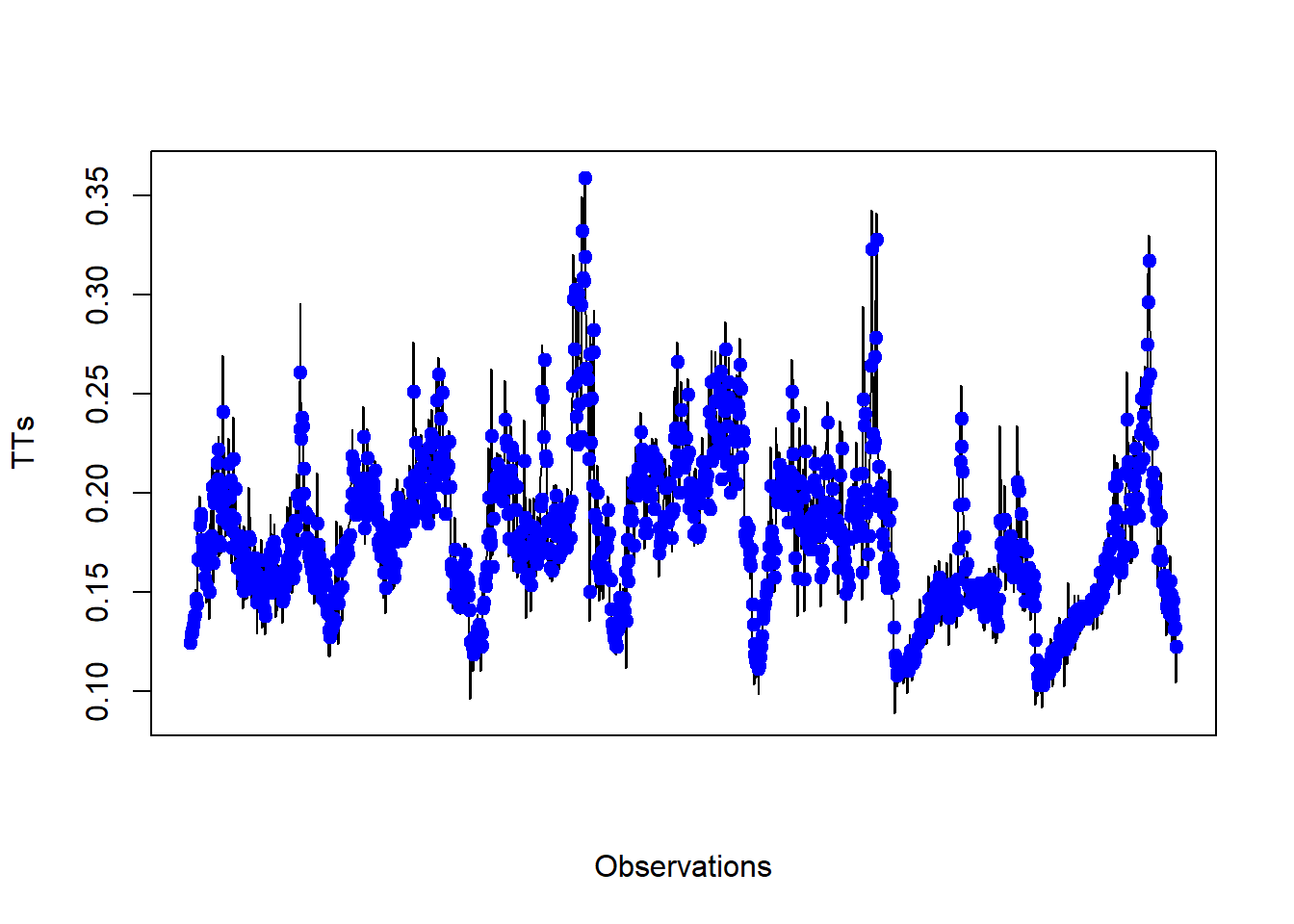

## Training error : 0.247802The model for one-step-ahead prediction can be used to plot the results (figure 4.8). The model forecasts the TTs reasonably well. For road link 2112, the proportion of points used as support vector has been of 62% from the dataset.

# We can use the model for one-step-ahead prediction and plot the results

yPred <- predict(SVRFitCoarse_d2, XTest_d2)

plot(yTest_d2, type="l", xaxt="n", xlab="Observations", ylab="TTs")

points(yPred, col="blue", pch=21, bg="blue")

#axis(1, at=seq(90, (180*5)+90, 180), labels=unique(dates[(split+1):nrow(data),1]))

Figure 4.8: Observed vs predicted TT using an SVR model

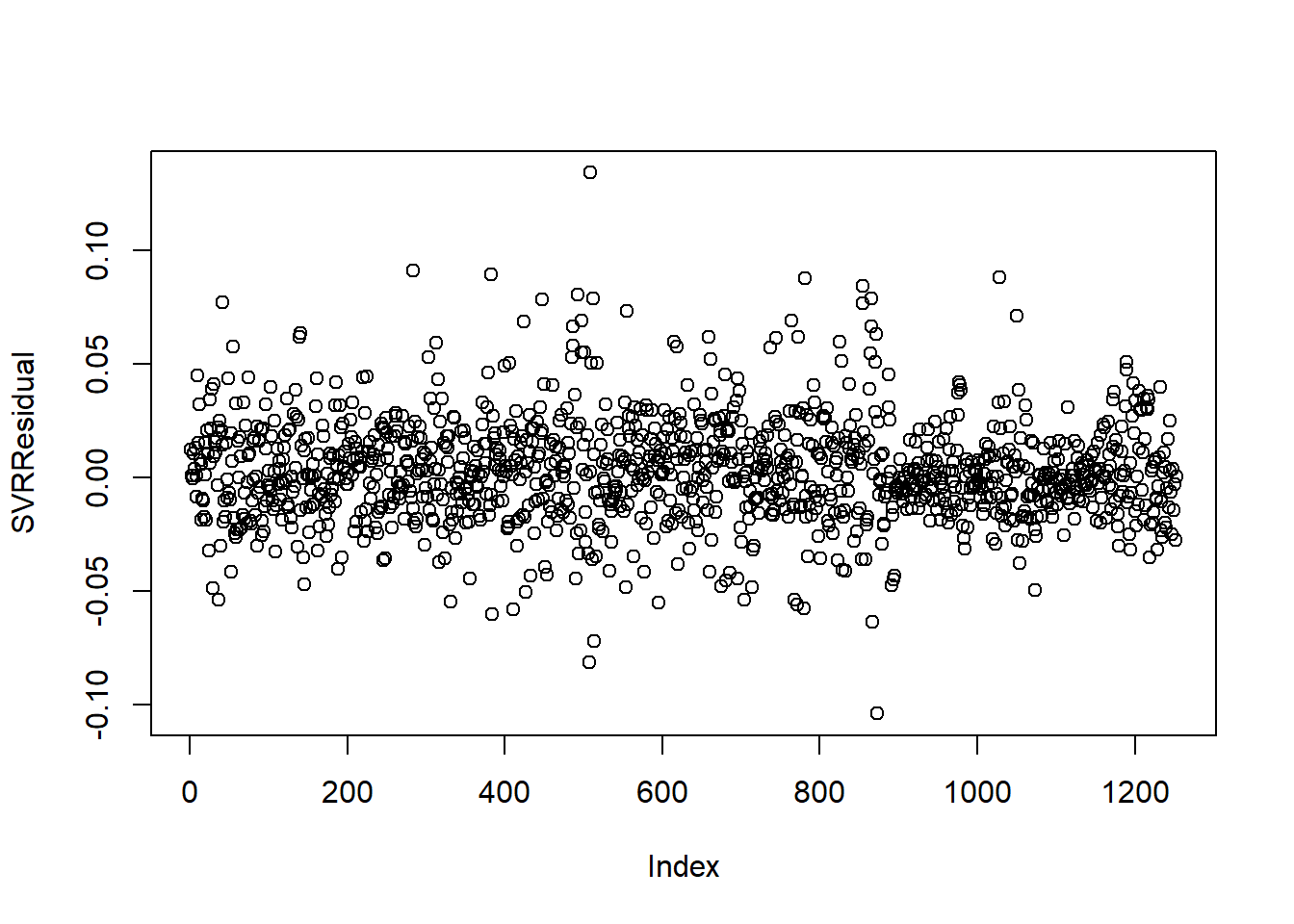

Finally, it is also important to check whether any temporal autocorrelation remains in the residuals of the model. The autocorelation function (ACF) is used in time series analyses.

It is good practice to also check whether any temporal autocorrelation remains in the residuals of the model (figure 4.9)

# Check whether any temporal autocorrelation remains in the residuals of the model

SVRResidual <- yTest_d2-yPred

plot(SVRResidual)

Figure 4.9: Residual autocorrelation for SVR model

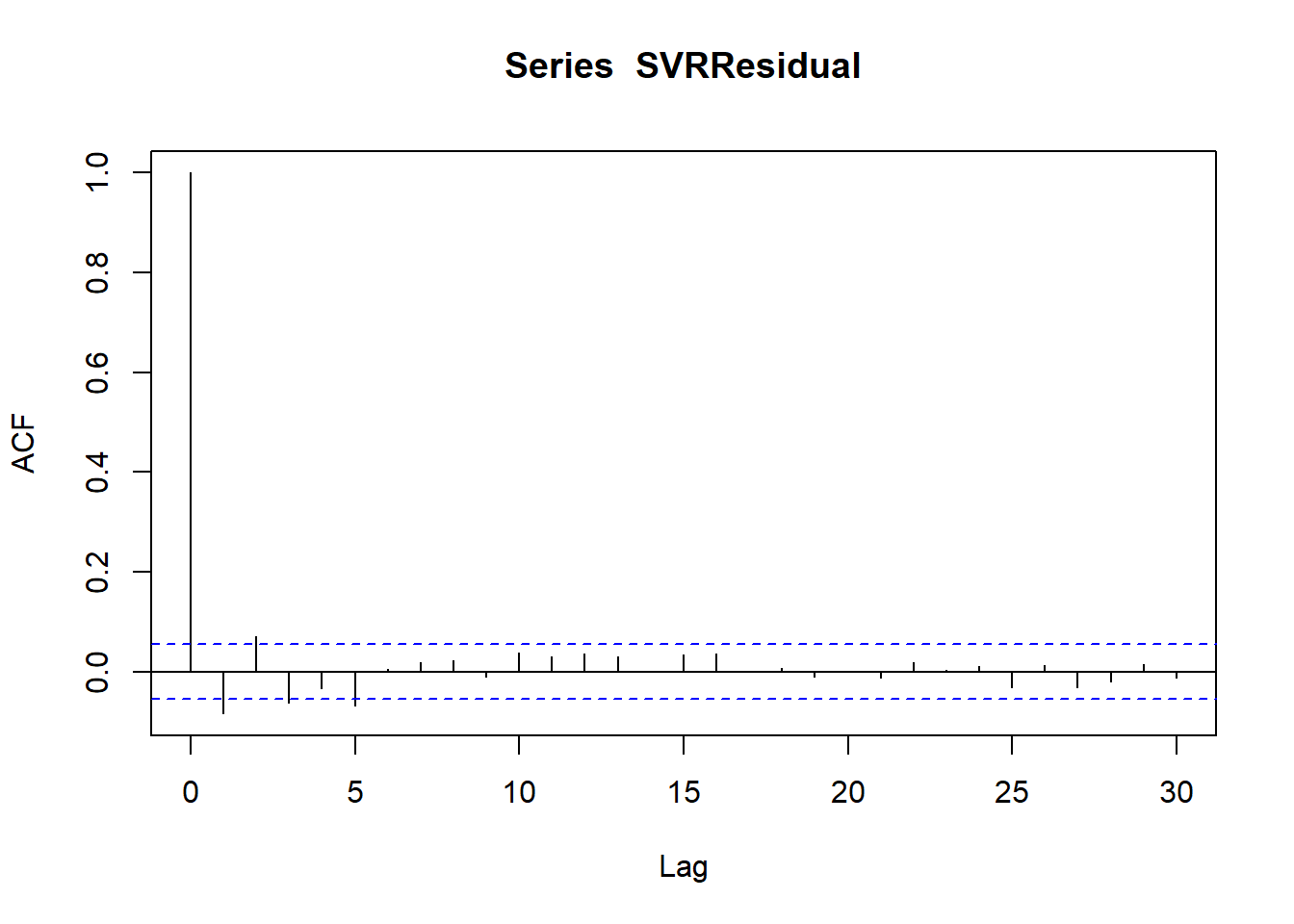

figure 4.10) displays differenced autocorrelation function (ACF) plot for the data of the series. The ACF is usually calculated for a set number of lags. The smaller the lag (separation between observations) the greater the correlation between temperatures. The dashed lines are approximate 95% confident interval for the autocorrelation. It can be notted that no significant overall autocorrelation is presented. Additionally, no seasonal pattern could be noted as well.

# To produce an autocorrelation plot

acf(SVRResidual)

Figure 4.10: Residual autocorrelation for SVR model

4.4 Chapter Summary

The basic idea behind the development of SVMs is the same: for a given learning task, with a given finite number of training data, the best generalization performance will be achieved if the right balance is struck between the accuracy attained on that particular training set, and the ability of the algorithm to predict unseen data samples with a minimum of errors.

Finally, it is worth mentioning that the experiment applies time series only. It is possible to create a space-time model using the spatial weight matrix but since the spatio-temporal autocorrelation is weaker than the temporal autocorrelation (as previously discussed on the study), the model has not been applied.

knitr::opts_chunk$set(error = TRUE)References

Burges, C. J. 1998. “A Tutorial on Support Vector Machines for Pattern Recognition.” Data Mining and Knowledge Discovery 2(2): 121–67.

Cheng, T, and J. Haworth. 2019. “Spatio-Temporal Data Analysis and Big Data Mining.” University College London.

Cortes, C., and V. Vapnik. 1995. “Support-Vector Networks.” Machine Learning 20(3): 273–97.

Wu, Ho, C. H., and D. T. Lee. 2004. “Travel-Time Prediction with Support Vector Regression.” IEEE Transactions on Intelligent Transportation Systems 5(4): 276–81.

SVR has greater generalization ability and guarantee global minima for a given training data.↩

For more information, see http://topepo.github.io/caret/index.html↩

Other common resampling methods are leave-one-out cross-validation, Monte Carlo cross-validation and the bootstrap↩

The optimal model is selected based on accuracy statistics.↩